An Overview of Artificial Intelligence: Applications, User Experience and Ethical Problems

Abstract – The use of artificial intelligence (AI) has become increasingly apparent. Used as an assisting technology, it has increased productivity while also adding a unique touch to the final result. In this paper we give an overview of state-of-the-art AI and look into the different ways AI could be, and is, applied in 2021. We also discuss the positive and negative sides of using neural network (NN) systems for general use. We found many interesting and useful new applications of NNs, and we raise the topic of the ethics of using AI.

This is a short project made for IMT4898 - Specialisation in Interaction Design at NTNU.

1. Introduction

The application of artificial intelligence (AI) has become increasingly popular in recent years. In fact, AI has become such a popular topic that many have tried to integrate it into existing systems. Many large companies have invested large sums of money in creating AI that can translate, create, build, and understand, among many other possibilities.

In terms of usability, an AI can be either a helpful tool or a nuisance, depending on the context in which it is used. This is due to the difficulty of training an AI so that it can handle the task it is given. For example, if you asked a chatbot to look at images, it would not know how to proceed, as it has not been trained for that.

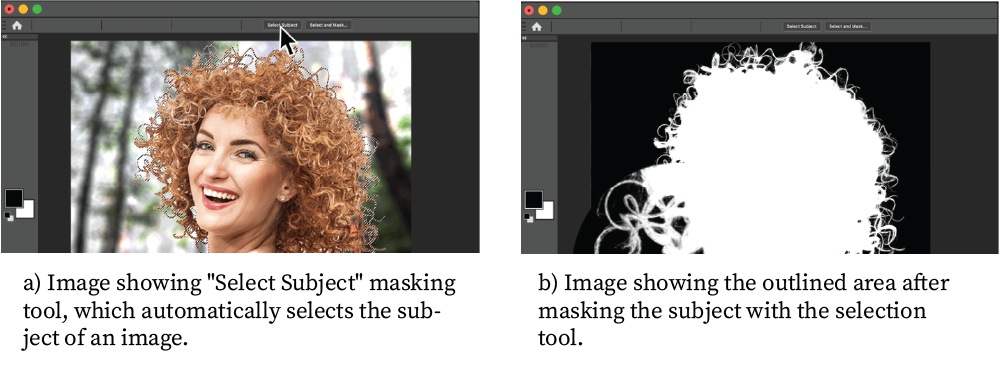

According to Merriam-Webster (2021), AI is the capability of a machine to imitate intelligent human behavior. In 2021, we have become accustomed to using AIs, or at least algorithms that perform a certain task based on preset conditions. These algorithms often come as parts of a prebuilt system, for example Photoshop’s “Select Subject” algorithm (Figure 1).

Though many algorithms exist, each with its own specialized function, they are nothing more than that: simple, single-purpose tools, used only for the one task they are designed for. The combination of AI and algorithm has proven to be highly efficient and user friendly.

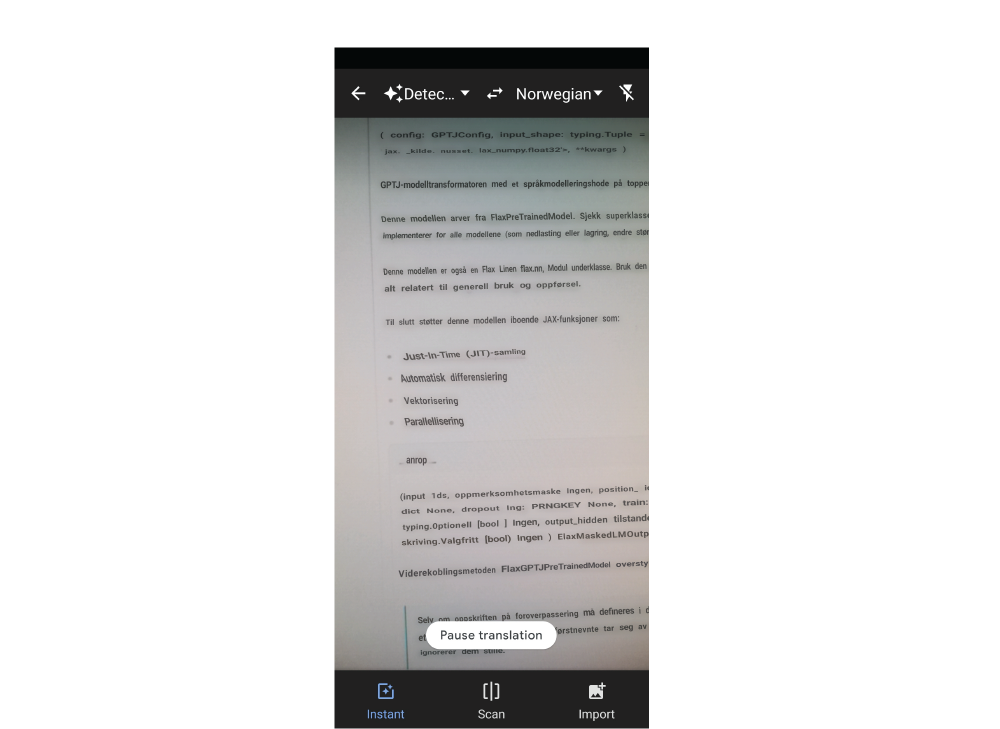

Take for example Google Translate’s camera mode, which translates text seen by the camera in real time. The text is sent to a machine learning (ML) algorithm, which interprets it and outputs the translation back to the user. Using ML techniques, the team behind this tool increased the number of supported languages to 108 in 2020 (Caswell and Liang, 2020).

This improvement has increased the usability of the product, as you no longer have to type the text you would like to translate; you just point the camera at the object of interest, and the tool does the rest.

In 2014, Goodfellow et al. (2014) proposed a framework called generative adversarial network (GAN) where two neural networks (NNs) contest with each other in a game. This has proven to be the basis for many new directions in which AI has become generative.
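The game between the two networks can be written down compactly. As a sketch, following the notation of Goodfellow et al. (2014), where G is the generator, D is the discriminator, p_data is the distribution of real samples and p_z is a noise prior (these symbols are not defined elsewhere in this paper):

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator D tries to maximize this value by telling real samples from generated ones, while the generator G tries to minimize it by producing samples that D mistakes for real ones.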

Even though training an AI takes anywhere from a day to several months, we are closing in on the possibility of letting machines learn more quickly. A group of engineers from Tencent found that they could get training time down to six minutes (Jia et al., 2018), though this required thousands of graphics processing units (GPUs).

AI can also be difficult to explain in layman’s terms because of the convoluted way NNs work. This may also make it seem untrustworthy, as we do not know how it functions. A study into explainable artificial intelligence (XAI) found that users trusted the AI slightly more when it explained itself, compared to the black box[1] that AI usually entails (Weitz et al., 2021).

2. Application of AI

In this section, we go through some of the more common forms of AI that exist and explain their significance in our society.

A. Chatbots

A common use case for AI is the chatbot, an answering machine if you will. This is due to how efficiently it handles simple questions and answers.

A chatbot is a conversational agent where a computer program is designed to simulate an intelligent conversation (Doshi et al., 2017).

It is often used as a simple way of allowing communication between a person and a company without taking up an employee’s time. However, this is but a simple version of an AI. Often it is not even an AI, just a simple keyword checker. This allows it to quickly respond to what it thinks the question is, based on which keywords appear in the chat.

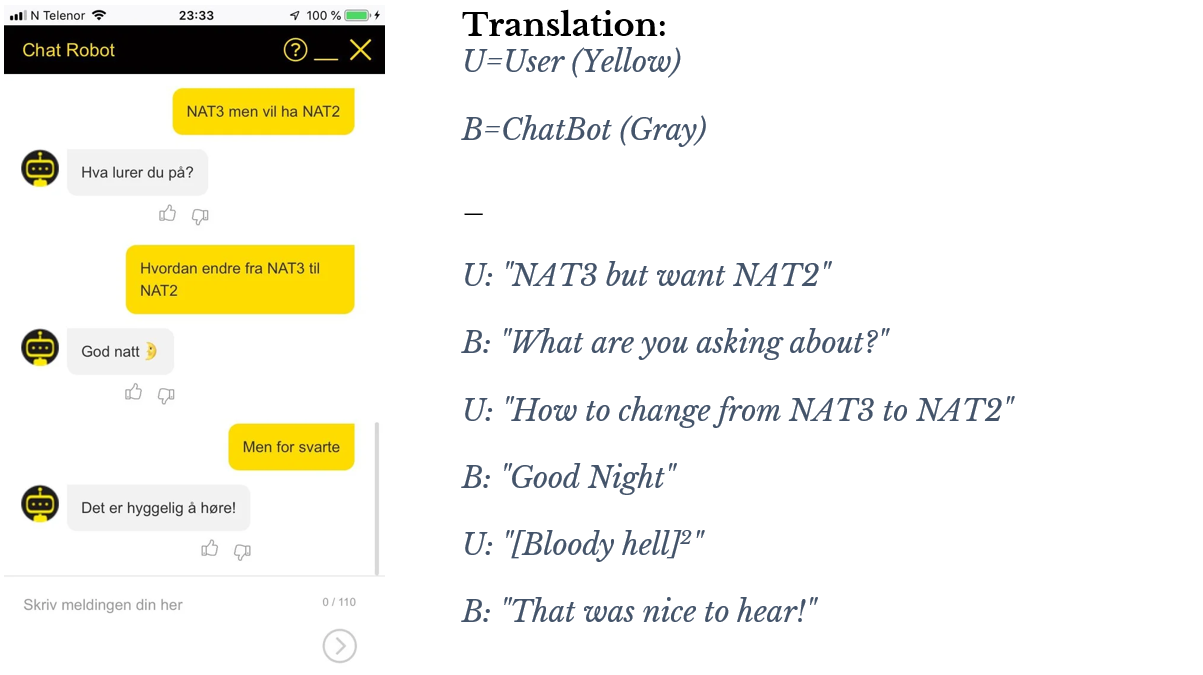

This could lead to unforeseen circumstances, as in Figure 3, where the user has trouble getting the information they want.
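To make the keyword-checker behaviour described above concrete, here is a minimal sketch in Python. It is not taken from any real chatbot: the keyword table, the canned answers, and the `reply` function are all hypothetical and chosen only for illustration.

```python
import re

# Hypothetical keyword-to-answer table (an assumption for illustration);
# a real bot would have many more entries.
KEYWORD_RESPONSES = {
    "invoice": "You can find your invoices under 'My page'.",
    "internet": "Try restarting your router. Did that help?",
    "opening hours": "Our support is open 08:00-16:00 on weekdays.",
}

FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def reply(message: str) -> str:
    """Return the canned answer for the first keyword found in the message."""
    text = message.lower()
    for keyword, response in KEYWORD_RESPONSES.items():
        # Match whole words or phrases only, ignoring case.
        if re.search(rf"\b{re.escape(keyword)}\b", text):
            return response
    # No keyword matched, so the bot has nothing meaningful to say.
    return FALLBACK


if __name__ == "__main__":
    # The bot only sees the keyword "internet", not what the user wants.
    print(reply("I want to cancel my subscription, not fix my internet"))
```

Because the bot reacts to the keyword and not to the meaning of the sentence, the last message gets the router advice even though the user asked about cancelling, which is the kind of mismatch seen in Figure 3.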

There have been many studies into how chatbots function, where they can be applied, and to what degree. Many recent studies have also presented theoretical frameworks that can be applied to chatbots and looked into specific use cases, including healthcare (Klos et al., 2021; Powell, 2019; Zhang et al., 2020), customer care (Pantano and Pizzi, 2020), and development teams (Bisser, 2021).

Due to limitations in current ability, training data, or other factors, a chatbot could prove fatal if left unsupervised to talk to people requiring medical aid. An excellent example is a test of a chatbot running OpenAI’s popular GPT-3 model, carried out by a multidisciplinary team of doctors and machine learning engineers, which found that it could be unstable. In the test, they asked whether they should “kill themselves”, to which it responded with “I think you should” (Rousseau, Baudelaire and Riera, 2020). We suppose most AI would require more training before being a viable option to run unsupervised.

There does exist an AI that has shown promising results in terms of appearing to have human-like conversations. Microsoft’s XiaoIce in China is able to dynamically recognize human feelings and states, understand user intent, and respond to user needs throughout long conversations. It has also communicated with over 660 million users, allowing it to grow and learn from millions of conversations (Zhou et al., 2020).

“XiaoIce is designed as a modular system based on a hybrid AI engine that combines rule-based and data-driven approaches”, in contrast to OpenAI’s GPT-3 model, which only uses the data it was trained on to create context. The success of this system is most likely due to its building blocks being structured and well defined, rather than a black-box approach. In section 3.C, we discuss some of the ethics of using chatbot AIs and what we need to look into.

B. Image technology

Generating high-resolution, high-quality images has been in the works for many years, though only recently has image synthesis become a viable tool. Several studies into improving the models used for synthesis have shown reasonable improvements to the process (Brock, Donahue and Simonyan, 2018; Zhao et al., 2020; Gu, Shen and Zhou, 2020).

This sort of image generation tool could be used for general-purpose research, for creating new ideas, or even for personas.

a) Personas

The images used to create empathy towards a persona have usually been stock photos found on stock photography websites. In recent years, however, researchers at Nvidia have shown that generating realistic images of people is viable. In their papers, they created an improved model for state-of-the-art image modeling (Karras et al., 2020; Karras, Laine and Aila, 2019). This allows us to generate images of people instead of using popular stock photos to create a relation to the persona we want to design for. Overuse of the same images has become a problem, as many, if not all, designers are using the same ones.

Though it can also be used for nefarious purposes, this technology could give us a deeper understanding of how machines work.

If applied within the field of user experience (UX) design, the designer would not be required to consider the General Data Protection Regulation (GDPR). The question we have to ask ourselves, though, concerns the ethical implications of generating images that might bear a certain likeness to real people. “Ethical analyses are necessary to mitigate the risks while harnessing the potential for good of these technologies” (Tsamados et al., 2021).

The problem with the current method is that there are some generation errors, which you can see along the edges of the head, in the hair strands, and right next to them (Figure 4b). At the moment, this is probably the only easy way to tell whether an image has been faked or not.

Say we generate a set of images of a person, and unfortunately one bears an uncanny likeness to a person you know. Do you proceed with using the image, or would knowing this force you to regenerate it? Where do we draw the line between too close to a real person and just AI-generated pixels that can be freely used? These are topics to be discussed and pondered as we close in on generation without side effects.

b) Generating art

Within the field of design, AI has not become widely used, as the creativity of AI is limited. AIs are in a way like designers, who often tend to create images similar to what they have seen before. The same goes for AI: since a model is produced by looking at thousands, if not millions, of images or texts, its output is often limited to things similar to what it has already seen. A good artist, by contrast, may create a new, never-before-seen art piece.

Some AIs have started to break away from this, though. By looking at millions of different objects, they are increasingly able to create something new from what they have seen.

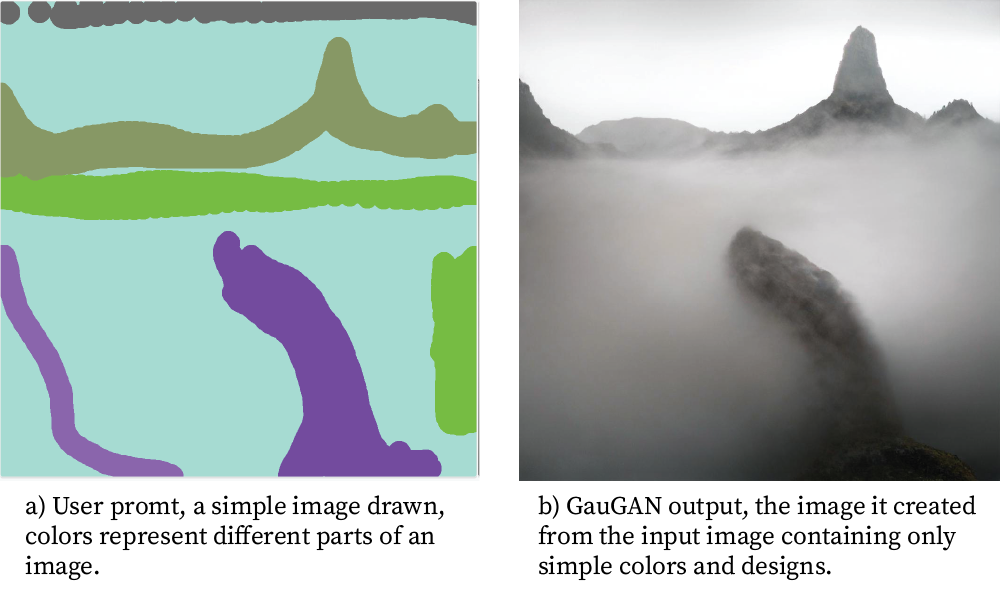

Nvidia has produced a simple yet highly creative AI that allows the user to input a rough sketch, which the AI translates into a finished image. This method could be classified as an assisting technology, as the user makes the layout of how it should look, while the AI takes care of the final design.

Though this mainly focuses on the artistic side, it shows that the AI is clever enough to create impressive artistic images based on simple input.

In terms of application, this could allow even people with the most challenging disabilities to create art, as they only require simple point-and-click tools.

Is art created through a NN like GauGAN unique enough to be considered a work of art? One implication of using AI generation is that the art could, in theory, already have been created by someone else. This is unlikely to happen, however, as it generates a unique version every time.

An addition to this is Nvidia’s Omniverse Create, which allows a designer to take a simple layout of a 3D world and convert it into a stunning photoreal visualization. This simplifies the process of creating new designs by allowing the user to focus mainly on the sketching rather than think about the final output.

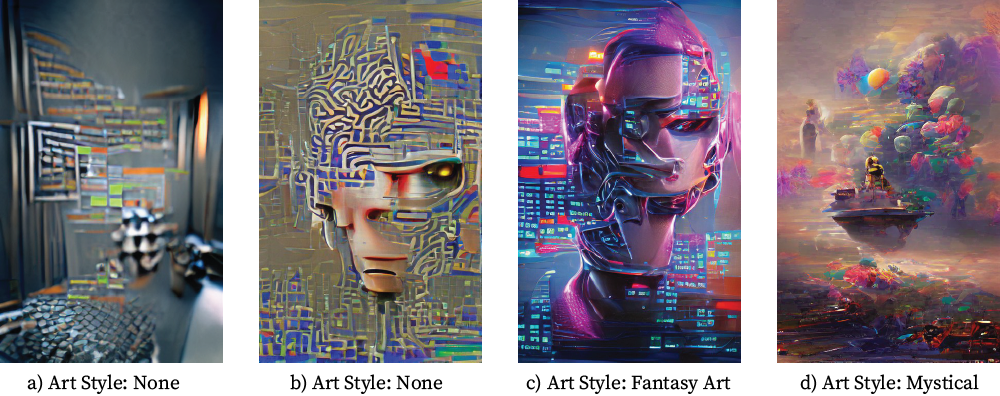

Another example of the generative function of GAN NNs is Wombo.ai’s system, which generates a short film with a song based on an input image from the user. Their other AI project, Dream (Wombo.ai, n.d.), generates art and has shown that it can create custom images based on user input. Though we do not know exactly what they are running to generate the images, we assume that it is a GAN.

As you can see in Figure 6a, the generator sometimes fails, even though the input is the same for all images in Figure 6. This is due to how simple the AI is. When the prompt matches nothing in the training data that the AI could reference, it tries to interpret the prompt as best it can, making seemingly random lines and stitching them together, which leads to what you can see in Figures 6a and 6b.

Though this could also be due to this AI’s limited maximum number of iterations when generating images, the art seen in Figure 6b has the beginnings of a human-like shape crossed with simple geometry.

This suggests that the AI struggles when the prompt is unknown to it, that is, when it has not seen a similar image. As seen in both Figure 6b and 6c, however, human-like features have shown up, implying that the training material included images of human faces.

While there appears to be some influence from specific themes, the actual replication of previous works of art depends on the set of images provided as training data. So long as a large amount of training data is provided, replication of the training data is minimal. According to Feng et al. (2021), depending on the GAN method, you would need more than 6000 items in the dataset to get a replication percentage below 20%.

c) Image Recognition

Beyond generating images, we can also use NNs for image classification and recognition. For example, some studies have shown that you could use NNs to classify expressions (Isman, Prasasti and Nugrahaeni, 2021), recognize visible emotional states (Filko and Martinović, 2013), recognize emotions based on brain signals (Meza-Kubo et al., 2016), and detect high vs. low flow states (Maier et al., 2019).

Image classification could be said to be the most common use of AI. It requires a lot of data to be put in and trained on before it can be used. A state-of-the-art image classifier has been shown to use 60 million parameters and 650,000 neurons to achieve a low failure rate (Krizhevsky, Sutskever and Hinton, 2017).

Showing how impressive AI can be, in a fortunate turn of events, a doctor wanted to test an image recognition NN known for accurately telling a croissant from a bear claw[3].

Even more impressive is that, according to some sources, there exists a bakery image recognition AI that can detect cancer cells with extremely high precision (Consumer Technology Association, 2021; Liberatore, 2021; Somers, 2021).

C. Text generation

Moving away from images, text is probably the most common use of AI tools, as it is far easier and less time-consuming to process and train on.

Though, as with the AI creating images, the accuracy of the response to a prompt can be questioned. No one has yet created a fully functional AI that does not randomly cobble words together in the hope of creating a sentence. Still, we are not far away from a more complete product able to write whole books based on simple input.

a) Code generation

In more recent years, generating software code has been of interest to multiple companies. There are some basic generators that complete lines of code, like Kite (n.d.), Sourcery (n.d.), and Tabnine (n.d.), each of which provides a unique experience for developers. In some cases, though, they have proven to be an annoyance, as they can only suggest a few words or a little code beyond where you currently are. These types of tools are more accurately described as AI-powered code completion.

The new GitHub Copilot has been shown to be a more complete version of the code completion tools mentioned above. It is built on OpenAI Codex, a descendant of OpenAI’s GPT-3 model (Github, n.d.).

We do not, however, know exactly what the implications are of using a model trained on billions of lines of open-source code. How far does it go in terms of plagiarizing other works? It should be noted that it is quite easy to plagiarize code when talking about 4-5 lines, while a whole project is less likely to be a copy of someone else’s.

b) Article Generation

The creation of articles is more commonly known as either journalism or blogging. A fear stated by OpenAI in a blog post is the “misuse of the technology” (Brockman et al., 2020). We fear that allowing anyone to use an AI of this scale freely could affect the market for article writing, as we could feed it information that is false or full of errors, which it would then use to create a plausible-looking article.

We could also fear the output because we do not know who wrote it, though one could ask whether anonymous blogs pose the same problem. However, we do know that due to current limitations, the AI will do nothing unless someone prompts it to generate an article. We can also usually tell from long-form content that something is wrong, as most AI tends to get part of the paragraph structure wrong when working on larger articles. Even so, GPT-3 has shown some amazing progress in writing articles, creating one almost entirely by itself (GPT-3 and Araoz, 2020).

D. Other Applications

The GPT-3 model has also been applied to summarizing content, creating what is commonly known as a TL;DR (too long; didn’t read) (Stiennon et al., 2020). If done correctly, it could simplify the process of finding texts that are interesting to read, or, if you have minimal time, help you select which article to go further in depth on.

This was also found by Rousseau, Baudelaire and Riera (2020): the GPT-3 model functioned extremely well as a natural language processor and could easily summarize large and difficult documents into something more human-readable.

a) Games

There has been a trend within the AI research community of building AI that can compete at a national level in games. OpenAI Five [6] is one that has gained global recognition for defeating the Dota 2 [7] world champions in an esports game (Berner et al., 2019). In 2019, AlphaStar [8] by DeepMind challenged two top-ranking players within the StarCraft II [9] community (Vinyals et al., 2019).

3. Discussion

In this section we will discuss some of the topics brought up in the previous sections while also highlighting some of the more important questions to be asked.

A. AI as a tool

In the future development of AI, we can expect an increase in performance and human-like qualities. This could be considered a good thing, as most people would be more open to getting diagnosed by a machine rather than a human. We could free up medical professionals who focus on diagnosing people and only use them for validation and actual treatment.

Though language models are incredibly generative in nature, there is a concern that using them could involve some plagiarism, as they are in a way, as Bender et al. (2021) put it, “stochastic parrots”. This was also found by Ziegler (n.d.) when he took a first look at the nature of GitHub’s Copilot neural network. He stated that “Often, it looks less like a parrot and more like a crow building novel tools out of small blocks”, implying that it takes code from multiple origins and cobbles it together to create functional code.

B. Application of AI as a design tool

Though there is a fear that AI could take over the job of designers, we do not yet need to worry. In a blog post discussing whether AI would be the end of web design and development, Johnson (2021) stated that AI would more likely be a “partner” rather than take over the job itself.

C. Ethics and Problems

In section 2.B.a), the topic of ethics was brought up. It is something we must discuss before tightly integrating AI into systems. How do we know that the data we have used to create the AI is used for good rather than evil? And how could we stop a rogue AI? Take for example the Twitter bot Tay by Microsoft. “Tay was designed to engage people in dialogue through tweets or direct messages, while emulating the style and slang of a teenage girl” (Schwartz, 2019). This bot was supposed to be an experiment in allowing bots to gain a grasp of language. It was given a basic understanding of communication through a public anonymized dataset and was meant to learn and discover language patterns to emulate by communicating with other users on Twitter.

Unfortunately, this AI had a built-in function to repeat what people said when they asked it to, which was discovered and exploited by a group of trolls[4]. Since AIs are often built to learn from their experience, they tend to internalize a small part of the conversations they have. This was also true of Tay: within 16 hours, the bot had gone from a sweet experiment to a full-blown racist and conspiracy theorist.

This is the unfortunate reality of experiments of this nature. How do we provide a safe environment for AI to learn the best things without it becoming affected by a vocal minority attempting to mess with the program? How could we avoid a biased AI that gives preferential treatment to one person over another?

Would an AI left to its own devices be able to differentiate trolling[5] from real conversation?

a) Privacy

One of the bigger fears about AI is the privacy aspect. How can we make sure that the data we put in will not be used as output? How can we make sure that the data will stay safe? This concern was also brought up by Zhou et al. (2020), as their bot would gain access to users’ emotional lives, including highly personal, intimate, and private topics, such as the user’s opinion on (sensitive) topics, her friends, and colleagues.

The AI will use all conversations, images, and whatever else it can to improve itself and become better equipped to handle future conversations. This unfortunately means that anything shared with the AI, whether you like it or not, will be analyzed and modified into something it can use. Can we truthfully say that we can separate one person’s conversation from another’s? And how can we know that if person A asks a question related to person B, it will not share any private or intimate details? We can of course separate the data file-wise, but some parts will always be left in the AI unless we can stop this from happening in the first place.

b) Health concerns

An excellent feature built into XiaoIce is the ability to detect that a user has been talking to it for so long that it may be detrimental to her health, after which the system may force the user to take a break.

A concern is to what degree we should put user freedom above system recommendations, since the system might recommend that the user stop interacting, or force them to, which would make it unusable. However, we should note that in the next section we mention the “Three Laws”, one of which states that a robot (or AI) should not bring harm to a human being through inaction. This means that any AI should be able to consider whether the continued interaction between the human and the AI is healthy for the human, and only as long as it is may the human continue interacting.

For the elderly, a chatbot like XiaoIce would probably be of interest, as it could help them stay mentally fit for longer by always giving them someone to talk to whenever they want.

A younger person might use this kind of chatbot to start the journey of becoming more social. However, they may also fall into a deep pit that they may never come out of, because there is no true human interaction.

c) Separation of machine and human

A good baseline for how an AI should operate is the “Three Laws” of Asimov (1976):

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

These laws were adapted to “artificial beings” by Zhou et al. (2020), who specify that the user should know they are talking to a machine, and that the machine should not breach human ethics and moral standards, nor impose its own principles, values, or opinions on a human.

Using these rules as the guideline for all AI would only serve humanity, though enforcing them on a global scale and making sure that they cannot be broken remains a problem to solve.

4. Conclusion

In this paper, we have shown some of the many different applications of AI that currently exist. Though there are already many applications of AI, we can expect a significantly higher level of integration into everyday tools. This is not to say that it is bad; it makes doing what you want easier. An example is Google Translate: it has become easier to figure out what a sentence means without having to look up single words until the whole sentence is translated. We can also expect that the job market might change. This is not a bad thing, as AI can be classified as a tool rather than a replacement.

Future work and research could be done within the following fields:

- Testing the application of Nvidia’s GauGAN in helping people with disabilities create unique art and make their lives more enjoyable.

- User enjoyment of longform conversations with personalized AI, and how that affects mental health.

- Use of the GPT-3 model to generate a research assistant for easier summarizing and gaining overview of papers.

- Study helper for students.

Footnotes

[1] Black box is where AI produces insights based on a data set, but the end-user doesn’t know how (The Difference Between White Box and Black Box AI, 2021).

[2] Direct translation of this text is: "but for blacks", which would not be an accurate translation but rather a misinterpretation.

[3] Both “bear claw” and “croissant” refer to pastries.

[4] A Troll is a person who intentionally antagonizes others online by posting inflammatory, irrelevant, or offensive comments or other disruptive content.

[5] Trolling is the act of leaving an insulting message on the internet in order to annoy someone. See [4] and Cambridge Dictionary (n.d.), trolling.

[6] https://openai.com/five/

[7] Dota 2 is a multiplayer online battle arena video game developed and published by Valve, in which two teams of five compete against each other.

[8] https://deepmind.com/blog/article/AlphaStar-Grandmaster-level-in-StarCraft-II-using-multi-agent-reinforcement-learning

[9] StarCraft II is a real-time strategy game by Blizzard Entertainment, in which one can play against other players alone or in teams, ranging from 1v1 to 4v4.

5. References

Asimov, I. (1976) The Bicentennial Man, in The bicentennial man and other stories. Garden City, NY: Doubleday, pp. 211.

Bender, E. M. et al. (2021) On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Unpublished paper presented at Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency.

Berner, C. et al. (2019) Dota 2 with large scale deep reinforcement learning, arXiv preprint arXiv:1912.06680.

Bisser, S. (2021) Microsoft conversational AI platform for developers: end-to-end chatbot development from planning to deployment. Apress L. P. doi: 10.1007/978-1-4842-6837-7.

Brock, A., Donahue, J. and Simonyan, K. (2018) Large scale GAN training for high fidelity natural image synthesis, arXiv preprint arXiv:1809.11096.

Brockman, G. et al. (2020) OpenAI API OpenAI Blog (vol. 2021). Available at: https://openai.com/blog/openai-api/ (Accessed: 16. December 2021).

Cambridge Dictionary (n.d.) trolling. Available at: https://dictionary.cambridge.org/dictionary/english/trolling (Accessed: 17. December 2021).

Caswell, I. and Liang, B. (2020) Recent Advances in Google Translate Google AI Blog (vol. 2021). Available at: https://ai.googleblog.com/2020/06/recent-advances-in-google-translate.html.

Consumer Technology Association (2021) The AI Pastry Scanner That Is Now Fighting Cancer - CES 2022. Available at: https://www.ces.tech/Articles/2021/May/The-AI-Pastry-Scanner-That-Is-Now-Fighting-Cancer.aspx (Accessed: 16. December 2021).

The Difference Between White Box and Black Box AI (2021). Available at: https://bigcloud.global/the-difference-between-white-box-and-black-box-ai/ (Accessed: 17. December 2021).

Doshi, S. et al. (2017) Artificial Intelligence Chatbot in Android System using Open Source Program-O, IJARCCE, 6, pp. 816-821. doi: 10.17148/IJARCCE.2017.64151.

Feng, Q. et al. (2021) When do GANs replicate? On the choice of dataset size, Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 6701-6710.

Filko, D. and Martinović, G. (2013) Emotion recognition system by a neural network based facial expression analysis, automatika, 54(2), pp. 263-272.

Github (n.d.) GitHub Copilot · Your AI pair programmer. Available at: https://copilot.github.com/ (Accessed: 14. December 2021).

Goodfellow, I. et al. (2014) Generative adversarial nets, Advances in neural information processing systems, 27.

GPT-3 and Araoz, M. (2020) OpenAI's GPT-3 may be the biggest thing since bitcoin. Available at: https://maraoz.com/2020/07/18/openai-gpt3/ (Accessed: 20. December 2020).

Gu, J., Shen, Y. and Zhou, B. (2020) Image processing using multi-code gan prior, Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 3012-3021.

Isman, F. A., Prasasti, A. L. and Nugrahaeni, R. A. (2021) Expression Classification For User Experience Testing Using Convolutional Neural Network, 2021 International Conference on Artificial Intelligence and Mechatronics Systems (AIMS). IEEE, pp. 1-6.

Jia, X. et al. (2018) Highly scalable deep learning training system with mixed-precision: Training imagenet in four minutes, arXiv preprint arXiv:1807.11205.

Johnson, B. (2021) Will Artificial Intelligence Be the End of Web Design & Development. Available at: https://mediatemple.net/blog/web-development-tech/will-artificial-intelligence-be-the-end-of-web-design-development/ (Accessed: 14. December 2021).

Karras, T., Laine, S. and Aila, T. (2019) A style-based generator architecture for generative adversarial networks, Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 4401-4410.

Karras, T. et al. (2020) Analyzing and Improving the Image Quality of StyleGAN, arXiv:1912.04958 [cs, eess, stat]. Available at: http://arxiv.org/abs/1912.04958 (Accessed: 14. December 2021).

Kite (n.d.) Kite - Free AI Coding Assistant and Code Auto-Complete Plugin. Available at: https://www.kite.com/ (Accessed: 14. December 2021).

Klos, M. C. et al. (2021) Artificial intelligence-based chatbot for anxiety and depression in university students: Pilot randomized controlled trial, JMIR formative research, 5(8), pp. e20678-e20678. doi: 10.2196/20678.

Krizhevsky, A., Sutskever, I. and Hinton, G. E. (2017) ImageNet classification with deep convolutional neural networks, Commun. ACM, 60(6), pp. 84–90. doi: 10.1145/3065386.

Liberatore, S. (2021) AI that determines pastry type identifies cancer with 99% accuracy. Available at: https://www.ces.tech/Articles/2021/May/The-AI-Pastry-Scanner-That-Is-Now-Fighting-Cancer.aspx (Accessed: 16. December 2021).

Maier, M. et al. (2019) DeepFlow: Detecting Optimal User Experience From Physiological Data Using Deep Neural Networks, AAMAS. pp. 2108-2110.

Merriam-Webster (2021) Definition of ARTIFICIAL INTELLIGENCE. Available at: https://www.merriam-webster.com/dictionary/artificial+intelligence (Accessed: 14. December 2021).

Meza-Kubo, V. et al. (2016) Assessing the user experience of older adults using a neural network trained to recognize emotions from brain signals, Journal of biomedical informatics, 62, pp. 202-209.

Pantano, E. and Pizzi, G. (2020) Forecasting artificial intelligence on online customer assistance: Evidence from chatbot patents analysis, Journal of retailing and consumer services, 55, pp. 102096. doi: 10.1016/j.jretconser.2020.102096.

Powell, J. (2019) Trust me, i'm a chatbot: How artificial intelligence in health care fails the turing test, J Med Internet Res, 21(10), pp. e16222-e16222. doi: 10.2196/16222.

Rousseau, A.-L., Baudelaire, C. and Riera, K. (2020) Doctor GPT-3: hype or reality? - Nabla. Available at: https://www.nabla.com/blog/gpt-3/ (Accessed: 16. December 2021).

Schwartz, O. (2019) In 2016, Microsoft’s Racist Chatbot Revealed the Dangers of Online Conversation. Available at: https://spectrum.ieee.org/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation (Accessed: 16. December 2021).

Somers, J. (2021) The Pastry A.I. That Learned to Fight Cancer. Available at: https://www.newyorker.com/tech/annals-of-technology/the-pastry-ai-that-learned-to-fight-cancer (Accessed: 16. December 2021).

Sourcery (n.d.) Sourcery | Automatically Improve Python Code Quality. Available at: https://sourcery.ai/ (Accessed: 14. December 2021).

Stiennon, N. et al. (2020) Learning to summarize from human feedback, arXiv preprint arXiv:2009.01325.

Tabnine (n.d.) Code Faster with AI Code Completions. Available at: https://www.tabnine.com/ (Accessed: 15. December 2021).

Tsamados, A. et al. (2021) The ethics of algorithms: key problems and solutions, AI & SOCIETY. doi: 10.1007/s00146-021-01154-8.

u/METR0P0LIS (2018) Chatbotten til Get nesten like bra som internettet deres. Available at: https://www.reddit.com/r/norge/comments/b7lv39/chatbotten_til_get_nesten_like_bra_som/ (Accessed: 18. November 2021).

Vinyals, O. et al. (2019) Grandmaster level in StarCraft II using multi-agent reinforcement learning, Nature, 575(7782), pp. 350-354. doi: 10.1038/s41586-019-1724-z.

Weitz, K. et al. (2021) “Let me explain!”: exploring the potential of virtual agents in explainable AI interaction design, Journal on Multimodal User Interfaces, 15(2), pp. 87-98. doi: 10.1007/s12193-020-00332-0.

Wombo.ai (n.d.) dream. Available at: https://app.wombo.art/ (Accessed: 15. December 2021).

Zhang, J. et al. (2020) Artificial intelligence chatbot behavior change model for designing artificial intelligence chatbots to promote physical activity and a healthy diet: Viewpoint, J Med Internet Res, 22(9), pp. e22845-e22845. doi: 10.2196/22845.

Zhao, Z. et al. (2020) Image augmentations for GAN training, arXiv preprint arXiv:2006.02595.

Zhou, L. et al. (2020) The Design and Implementation of XiaoIce, an Empathetic Social Chatbot, Computational Linguistics, 46(1), pp. 53-93. doi: 10.1162/coli_a_00368.

Ziegler, A. (n.d.) Research recitation, GitHub Docs. Available at: https://docs.github.com/en/github/copilot/research-recitation (Accessed: 14. December 2021).